Cloud computing has transformed nearly every industry, but every technology reaches its limits without new tools to push it forward. Edge deployment brings processing power closer to data sources and end users, making it easier to support emerging technologies that demand heavy processing and low latency. According to a 2023 Accenture study, 28% of tactical firms, those looking to edge to solve a specific problem, have partially or fully integrated edge computing into their cloud strategies. Integrators and Super Integrators, firms that have embraced edge computing over other emerging technologies, are far ahead, at 50% and 67% integration with cloud, respectively.

Edge computing is poised to enable cloud innovation for businesses looking to take the next step on their digital transformation journeys.

What is Edge Deployment?

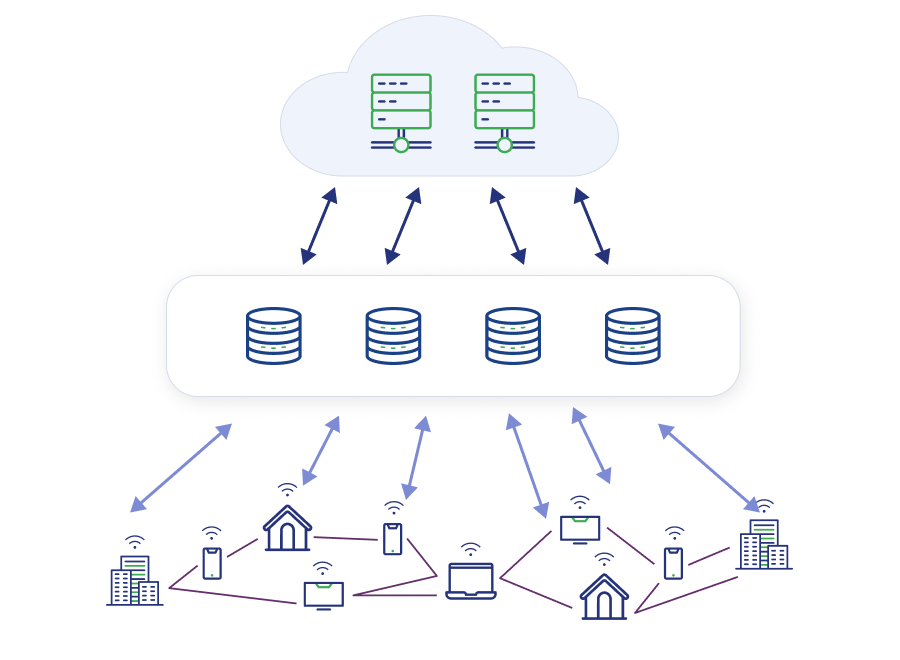

Instead of computing power being relegated to a centralized location, edge deployment processes data at the edge of the network, generally closer to the end users who are creating, receiving, and using data. Edge deployment can offer real-time, scalable benefits for businesses in many different industries.

How Edge and Cloud Computing Work Together

Many organizations are embracing cloud computing, particularly multicloud and hybrid cloud models. According to Flexera, 87% of organizations are employing multicloud and 72% are using hybrid cloud architectures, combining legacy, on-premises frameworks with the cloud’s flexible computing capabilities. Edge deployments work in tandem with the cloud to allow for faster processing, improved responsiveness, and new innovative possibilities.

Benefits of Edge Deployments in Cloud Ecosystems

When edge deployments reside in cloud ecosystems, the business and end users can benefit from reduced latency, optimized bandwidth, improved scalability, better insights, and newly enabled possibilities.

Reduced Latency

Time-sensitive applications benefit greatly from real-time processing at the edge. For applications and devices that depend heavily on real-time feedback, including wearable devices, user experience improves when latency is at its lowest.

Optimized Bandwidth

Centralized cloud processing can be fast, but sending all raw data to one central location can create bandwidth bottlenecks. Edge deployments can process data locally, leaving only small summaries and final results to be sent to and processed by the cloud. Cloud providers may also charge for data transfer, so offloading processing to the edge can reduce both the amount of data transferred and the associated costs.
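As a rough illustration of the "small summaries" idea, the sketch below reduces a window of raw sensor readings to a compact record before anything leaves the edge. The sampling rate, field names, and the `summarize` helper are assumptions made for this example, not part of any specific platform.

```python
import json
import statistics


def summarize(readings):
    """Reduce a window of raw readings to a small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


# Hypothetical one-minute window sampled at 10 Hz (600 readings).
raw_window = [20.0 + (i % 10) * 0.1 for i in range(600)]
summary = summarize(raw_window)

# Compare the payload size of the raw window with the summary.
raw_bytes = len(json.dumps(raw_window).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))
print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

Only the summary would be uploaded in this scheme; the raw window stays on the edge device or is discarded, depending on retention needs.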

Improved Scalability and Agility

By spreading tasks across edge devices, edge computing creates a more scalable architecture. If the number of devices and users suddenly increases, edge computing is well-equipped to handle increased workloads without putting a strain on a central system. Edge deployments also commonly use modular components, which can be added and removed quickly.

Better Analytics and Insights

Cloud computing makes it easier to collect data, analyze it, and build on the resulting insights to support real-time decision-making. Some businesses are more vulnerable than others to sudden events and rapid changes. Edge computing can offer the real-time analysis and insights that businesses need to react quickly, shifting gears in response to emerging data.

Edge computing can also help with the preprocessing side of data analysis, filtering and processing data at the edge before it is sent to central servers. This reduces bandwidth usage and can provide deeper, locally relevant takeaways.
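One common way to filter at the edge is a deadband filter, which forwards a reading only when it differs meaningfully from the last value sent. The sketch below is a minimal version of that technique; the threshold and sample values are made up for illustration.

```python
def deadband_filter(readings, threshold):
    """Yield only readings that differ from the last forwarded value
    by more than `threshold`."""
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


# Hypothetical temperature readings in degrees Celsius.
readings = [21.0, 21.1, 21.05, 22.0, 22.1, 25.0, 25.2]
forwarded = list(deadband_filter(readings, threshold=0.5))
print(forwarded)  # [21.0, 22.0, 25.0]
```

Here seven readings shrink to three, and the central servers still see every significant change.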

Empowered New Applications

Edge computing can unlock applications that were previously either too clunky or outright impossible to run with traditional cloud models. Real-time industrial automation, remote patient monitoring, and immersive AR/VR experiences all depend on the low latency that edge deployments provide.

Key Use Cases for Edge Deployment in the Cloud

The rise in cloud-based artificial intelligence and machine learning adoption, along with the popularity of Internet of Things (IoT) devices in and out of the home, means that the key use cases for edge deployment in the cloud are many and varied.

IoT and Wearable Devices

We have yet to reach the peak for IoT-connected devices. Statista predicts that by 2030, 29.42 billion IoT devices will be on the market worldwide, doubling the numbers from 2023. The consumer segment is expected to retain the majority of this share at 60%. These devices can include smart watches, programmable lights, smart speakers, and security systems. IoT devices can also help healthcare providers monitor patients from afar – processing at the edge means alerts can be sent more quickly to practitioners, giving them more time to make critical decisions for their patients.

Augmented Reality / Virtual Reality

Virtual reality and augmented reality headsets have the power to create truly immersive experiences, but this only works if the technology has little to no latency and the power to process complex visuals and data. Edge computing in AR/VR settings can help participants collaborate remotely, play games in engaging environments, and feel like they are in the room with others.

Autonomous Vehicles

Even a split second of latency for a self-driving car can mean disaster. Autonomous vehicles will need stellar 5G connectivity and edge computing to analyze sensor data in real time and react quickly to incoming traffic changes and potential obstacles.

Optimized Traffic Flow and Smart Cities

Have you ever sat at a red light for what feels like an eternity while almost no traffic comes from the other direction? Edge computing can optimize traffic flow by analyzing sensor data and changing lights accordingly. Smart city capabilities could include responding to events in real time and analyzing where more resources may be needed based on congestion patterns.
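To make the traffic light example concrete, here is a deliberately simplified sketch of how an edge controller at one intersection might split green time in proportion to the queues its sensors detect. The `green_split` function, the 60-second cycle, and the 10-second minimum green are illustrative assumptions; real signal control involves far more constraints, such as pedestrian phases and coordination between intersections.

```python
def green_split(queue_ns, queue_ew, cycle_s=60, min_green_s=10):
    """Split one signal cycle between north-south and east-west traffic
    in proportion to the detected queue lengths.

    Each direction always receives at least `min_green_s` seconds.
    """
    total = queue_ns + queue_ew
    if total == 0:
        # No vehicles detected: fall back to an even split.
        return cycle_s / 2, cycle_s / 2
    flexible = cycle_s - 2 * min_green_s
    green_ns = min_green_s + flexible * queue_ns / total
    return green_ns, cycle_s - green_ns


# Hypothetical sensor counts: 18 vehicles waiting north-south, 2 east-west.
print(green_split(18, 2))  # (46.0, 14.0)
```

Because the decision needs only local sensor data, it can be made at the intersection itself, without a round trip to a central system.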

Predictive Maintenance

Edge computing and AI work together in predictive maintenance. Sensors installed on machinery at the edge produce data that an algorithm can read and interpret, predicting when equipment needs repairs before it breaks down.
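Production systems often use trained models, but the basic idea can be sketched with a much simpler stand-in: a rolling z-score that flags sensor readings far outside recent behavior. The `VibrationMonitor` class, window size, threshold, and sample values below are all assumptions chosen for illustration.

```python
from collections import deque
import statistics


class VibrationMonitor:
    """Flag readings that deviate strongly from recent history."""

    def __init__(self, window=20, z_threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, value):
        """Return True if `value` looks anomalous against recent readings."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Keep only normal readings so one spike does not skew the baseline.
            self.history.append(value)
        return anomalous


# Hypothetical vibration amplitudes; the final reading is a sharp spike.
monitor = VibrationMonitor()
samples = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 5.0]
flags = [monitor.update(v) for v in samples]
print(flags)
```

Running the check next to the machine means an alert can be raised immediately, while only flagged events need to be forwarded for deeper analysis.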

Personalized Retail Experiences

Personalized retail experiences also offer a perfect pairing for AI and edge computing. Shopper behavior can be collected at the edge, analyzed, and used to personalize in-store promotions and product recommendations.

Considerations for Effective Edge Deployments

Effective edge deployments don’t happen on their own. They can be made more successful by selecting the proper architecture and use cases, as well as paying attention to other intricacies involved with edge computing, including connectivity, in-house skills, data security, and other complexities.

Selecting the Right Architecture

Choosing the appropriate edge architecture is a fundamental decision in the success of any edge deployment. Three common architectures—cloud-based edge, on-premises edge, and hybrid edge—offer distinct advantages based on specific considerations.

- Cloud-based Edge: This is an ideal deployment when latency is not the primary concern, and centralized control is a priority. Cloud-based edge offers centralized management and control, making it suitable for applications where real-time responsiveness is not the highest priority.

- On-premises Edge: This deployment reduces latency, making it a preferred choice for applications with stringent real-time requirements. However, it also increases the risk of attacks on the perimeter, so robust security measures are essential to mitigate potential vulnerabilities.

- Hybrid Edge: This deployment combines cloud-based and on-premises edge, offering a balance between centralized control and low latency.

Carefully weighing the trade-offs between these architectures ensures that the chosen deployment aligns with specific needs, whether it’s prioritizing centralized control, minimizing latency, or finding a balanced hybrid solution.

Identify Suitable Use Cases for Edge

A suitable project for edge computing would be something that benefits significantly from low latency, reliable connectivity, and flexibility, particularly on the side of the end user. Businesses that have a central office with non-distributed workers and don’t produce devices or technology that are spread out in a wide area aren’t likely to need edge computing to operate effectively.

Organizations with a large remote workforce that relies on cloud applications, or with customers whose devices depend on real-time insights, are strong candidates for edge computing.

Connectivity Considerations

New infrastructure and connectivity options are being driven by many factors, including changes in application demands, according to Gartner. The proliferation of Internet of Things (IoT) devices in manufacturing, healthcare, oil and gas, security, transportation, and other industries, paired with the explosion of generative AI, increases the appeal of processing data outside of a centralized data center or cloud. The continued expansion of these devices and technologies will increase demand for bandwidth, especially at the network edges.

Data center providers need to adapt their infrastructure and services to support the needs of different edge computing applications. For example, an energy company that collects and analyzes near-real-time data from customer devices needs a network latency of no more than 10 milliseconds. To achieve that, edge servers or edge data centers must be in each customer market.

A real-time application such as robot-assisted remote surgery or self-driving cars requires even lower latency. Depending on the distribution of the robots, cars, or devices, that might mean putting micro edge data centers in dozens of office buildings or bus shelters around the city, or embedding hundreds of them in traffic lights. This connectivity will also be heavily supported by 5G wireless networks, which speed response times for mobile users and IoT devices.
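The link between a latency budget and server placement can be estimated with simple arithmetic. Light travels through optical fiber at roughly 200 km per millisecond, so a round-trip budget caps how far away an edge server can be. The sketch below computes that upper bound; the function name and the 4 ms processing allowance are assumptions for illustration, and real networks add routing, queuing, and last-mile delays that shrink the usable distance further.

```python
# Approximate speed of light in optical fiber (about two-thirds of c).
FIBER_KM_PER_MS = 200.0


def max_one_way_distance_km(rtt_budget_ms, processing_ms=0.0):
    """Upper bound on the fiber distance to an edge server, given a
    round-trip latency budget and time reserved for processing."""
    network_ms = rtt_budget_ms - processing_ms
    if network_ms <= 0:
        return 0.0
    # Half of the remaining time is available for each direction.
    return network_ms / 2 * FIBER_KM_PER_MS


# A 10 ms budget with a hypothetical 4 ms reserved for processing.
print(max_one_way_distance_km(10, processing_ms=4))  # 600.0
```

Even this best-case figure of 600 km shows why a 10 ms target pushes servers into each customer market, and why tighter budgets push them closer still.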

Examine In-House IT Skills

A major challenge for many companies is finding staff with a sufficient understanding of edge computing and the IT skillsets needed to implement and manage it. Skills gaps are expected to be an ongoing problem for IT teams in 2024, and that is especially true for edge computing, which involves additional complexities and intricacies many team members may not have previously encountered.

Edge planning and deployment require a mix of skills that may be difficult to find in some markets. For example, the surveyed engineers listed required skills such as process or automation engineering, computer networking, cloud computing, cybersecurity, data engineering, data science, and application development. For the majority of organizations, the best path forward is to get help from IT consultants, edge data center providers, and managed services providers.

Data Security and Protection

Edge computing is often perceived as a security threat by IT teams. That belief stems, in part, from the lack of security controls built into most IoT devices. That vulnerability is multiplied by the number of devices connected to the network.

One compromised device opens the door to other edge devices and the network as a whole. That can expose customer data and other confidential information. Edge devices need better security, starting with password management.

Many customers don’t bother to change the factory default passwords on their devices. Edge network equipment should be physically secured as well as monitored with network management software. Bringing in additional touchpoints needs to coincide with more stringent security measures.

Managing and Untangling Complexity

Managing remote edge servers and hundreds or thousands of connected devices is a major challenge, especially for IT departments accustomed to central data centers with on-site IT staff.

Two solutions are remote management and automation. Rules-based automation can keep devices operating without the need for constant human oversight. IoT management applications, such as the ones used for manufacturing devices or transportation fleet management, usually provide remote management and automation capabilities. Cloud and edge platform providers also offer remote device management. For example, Microsoft Azure’s IoT automatic device management provides tools for deploying and updating groups of devices.
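As a generic illustration of rules-based automation, the sketch below evaluates a small set of rules against a device's reported state and returns the actions to take. It is not the API of Azure or any other platform; the `Rule` class, telemetry fields, thresholds, and action names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]  # receives a device's telemetry
    action: str                        # label for the action to trigger


# Hypothetical rules; thresholds and action names are illustrative only.
RULES = [
    Rule("overheat", lambda d: d["temp_c"] > 80, "throttle"),
    Rule("stale-firmware", lambda d: d["firmware"] < (2, 1), "schedule_update"),
    Rule("offline", lambda d: d["seconds_since_heartbeat"] > 300, "restart"),
]


def evaluate(device):
    """Return the actions whose rule conditions match this device."""
    return [rule.action for rule in RULES if rule.condition(device)]


device = {"temp_c": 85, "firmware": (2, 0), "seconds_since_heartbeat": 10}
print(evaluate(device))  # ['throttle', 'schedule_update']
```

Keeping rules as data rather than hard-coded branches makes it easier to add, remove, or tune them across a fleet without redeploying device software.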

Even automation and remote management may not be enough in some cases. Equipment eventually breaks, and it rarely does so on a regular schedule. Data center service providers are increasingly offering managed services for edge deployments. Few organizations can afford to station an IT employee at every remote edge server. Organizations should look for managed edge services and “remote hands” support services to help handle maintenance and management.

Data center facilities, colocation providers, and hyperscale cloud providers all offer different types of edge services, so it pays to study your options. For instance, an organization that wants to own and control its edge server, but not an entire edge facility, might be well served by a colocation facility with shared rack space, power, cooling, and security. Another customer may not want to own or manage any edge equipment. In that case, data center services or hyperscale cloud providers may be the best option.

Finding the Right Balance with Edge and Cloud

Flocking to edge computing without a solid plan in place can mean implementation will fall flat, or that businesses will use more resources than necessary. Finding the right balance between the edge and the cloud is key. If you’re starting to weigh the merits of edge computing, but the execution is still fuzzy, TierPoint’s strategic guide to edge computing can offer clarity.
